Architecture

DirectPV is implemented according to the Container Storage Interface (CSI) specification. It runs the following components as Pods in Kubernetes:

- Controller
- Node server

When DirectPV manages legacy volumes created by DirectCSI, the following additional components also run as Pods:

- Legacy controller
- Legacy node server
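Which Pods a cluster should run depends only on whether legacy DirectCSI volumes are present. The sketch below models that rule. The Component type, the components() helper and its has_legacy_volumes flag are hypothetical and are not part of DirectPV. The Pod names and workload kinds come from the sections on this page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str      # Pod name used on this page
    workload: str  # Kubernetes workload kind that runs the Pods


# Components that always run.
BASE = [
    Component("controller", "Deployment"),
    Component("node-server", "DaemonSet"),
]

# Components that run only when legacy DirectCSI volumes are present.
LEGACY = [
    Component("legacy-controller", "Deployment"),
    Component("legacy-node-server", "DaemonSet"),
]


def components(has_legacy_volumes: bool) -> list[Component]:
    """Return the components expected to run (hypothetical helper)."""
    return BASE + LEGACY if has_legacy_volumes else list(BASE)


for component in components(has_legacy_volumes=True):
    print(f"{component.workload:<10} {component.name}")
```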

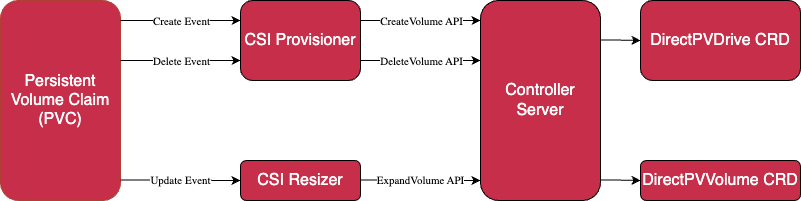

Controller

The Controller runs as Deployment Pods named controller.

The DirectPV controller has three replicas.

The replicas elect one instance to serve requests.
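This page does not say how the replicas choose a leader. A common Kubernetes pattern is a lease. One replica holds the lease and must keep renewing it, and another replica can take over only after the lease expires. The in-memory sketch below illustrates that pattern on the assumption that the election works this way. Lease, try_acquire, the replica names and the 15-second duration are hypothetical and are not DirectPV or Kubernetes client APIs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    """In-memory stand-in for a shared lease object (hypothetical)."""

    holder: Optional[str] = None
    renewed_at: float = 0.0
    duration: float = 15.0  # seconds the holder stays leader without renewing

    def try_acquire(self, replica: str, now: float) -> bool:
        """Acquire or renew the lease; return True if `replica` is now the leader."""
        expired = now - self.renewed_at > self.duration
        if self.holder is None or self.holder == replica or expired:
            self.holder = replica
            self.renewed_at = now
            return True
        return False


lease = Lease()
replicas = ["replica-a", "replica-b", "replica-c"]

# All three replicas start together; only the first one becomes leader.
print([r for r in replicas if lease.try_acquire(r, now=0.0)])  # ['replica-a']

# The leader renews at t=5, so replica-b cannot take over at t=10.
lease.try_acquire("replica-a", now=5.0)
print(lease.try_acquire("replica-b", now=10.0))  # False

# replica-a stops renewing (for example, its Pod died); at t=30 the lease has expired.
print(lease.try_acquire("replica-b", now=30.0))  # True
```

In a real cluster, all replicas must see the same lease, so it is stored in the Kubernetes API server. Here a single Python object stands in for it.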

Each Pod has the following containers:

- Controller: Honors CSI requests to create, delete and expand volumes.
- CSI provisioner: Bridges volume creation and deletion requests from a Persistent Volume Claim to the CSI controller.
- CSI resizer: Bridges volume expansion requests from a Persistent Volume Claim to the CSI controller.

Controller server

The controller server runs as a container named controller in a controller Deployment Pod.

It handles the following requests:

- Create volume: The controller server creates a new DirectPVVolume CRD after reserving the requested storage space on a suitable DirectPVDrive CRD. For more information, refer to the Volume scheduling guide.
- Delete volume: The controller server deletes an existing DirectPVVolume CRD for an unbound volume after releasing its previously reserved space in the corresponding DirectPVDrive CRD.
- Expand volume: The controller server expands an existing DirectPVVolume CRD after reserving the requested storage space in the corresponding DirectPVDrive CRD.
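All three requests follow the same pattern. The server adjusts the space accounted on a drive, then creates, updates or deletes the volume record. The sketch below models that bookkeeping with plain Python objects. Drive, Volume, ControllerServer, their fields and the first-fit drive choice are hypothetical simplifications. They are not DirectPV's CRD schemas or its scheduling logic, which the Volume scheduling guide describes. The bound flag stands in for whatever makes a volume ineligible for deletion.

```python
from dataclasses import dataclass


@dataclass
class Drive:
    """Simplified stand-in for a DirectPVDrive object (hypothetical fields)."""

    name: str
    total_bytes: int
    allocated_bytes: int = 0

    @property
    def free_bytes(self) -> int:
        return self.total_bytes - self.allocated_bytes


@dataclass
class Volume:
    """Simplified stand-in for a DirectPVVolume object (hypothetical fields)."""

    name: str
    drive: Drive
    size_bytes: int
    bound: bool = False


class ControllerServer:
    """Tracks space reservations for create, delete and expand requests."""

    def __init__(self, drives: list[Drive]) -> None:
        self.drives = drives
        self.volumes: dict[str, Volume] = {}

    def create_volume(self, name: str, size_bytes: int) -> Volume:
        if name in self.volumes:  # CSI expects CreateVolume to be idempotent
            return self.volumes[name]
        # First fit; DirectPV's real drive selection is not modeled here.
        for drive in self.drives:
            if drive.free_bytes >= size_bytes:
                drive.allocated_bytes += size_bytes  # reserve space first
                volume = Volume(name, drive, size_bytes)
                self.volumes[name] = volume  # then record the volume
                return volume
        raise RuntimeError(f"no drive has {size_bytes} free bytes")

    def delete_volume(self, name: str) -> None:
        volume = self.volumes[name]
        if volume.bound:
            raise RuntimeError(f"volume {name} is still bound")
        volume.drive.allocated_bytes -= volume.size_bytes  # release the reservation
        del self.volumes[name]

    def expand_volume(self, name: str, new_size_bytes: int) -> Volume:
        volume = self.volumes[name]
        extra = new_size_bytes - volume.size_bytes
        if extra <= 0:
            return volume  # already large enough
        if volume.drive.free_bytes < extra:
            raise RuntimeError(f"drive {volume.drive.name} lacks {extra} free bytes")
        volume.drive.allocated_bytes += extra  # reserve only the additional space
        volume.size_bytes = new_size_bytes
        return volume
```

Consider two drives of 100 and 50 bytes. Creating an 80-byte volume reserves space on the first drive. Expanding that volume to 90 bytes reserves 10 more bytes on the same drive. Deleting it returns all 90 bytes to that drive.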

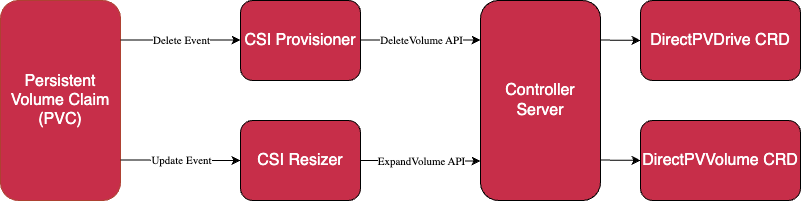

Legacy controller

The Legacy controller runs as Deployment Pods named legacy-controller.

The Legacy controller has three replicas, which can run on any Kubernetes node.

The replicas elect one instance to serve requests.

Each Pod has the following containers:

- CSI provisioner: Bridges legacy volume creation and deletion requests from a Persistent Volume Claim to the CSI controller.
- Controller: Honors CSI requests to delete and expand volumes only; create volume requests are prohibited. The legacy controller works only for legacy volumes previously created by DirectCSI.
- CSI resizer: Bridges legacy volume expansion requests from a Persistent Volume Claim to the CSI controller.

Legacy controller server

The Legacy controller server runs as a container named controller in a legacy-controller Deployment Pod.

It handles the following requests:

- Create volume: The legacy controller server returns an error for this request, because DirectPV does not create new legacy DirectCSI volumes.
- Delete volume: The legacy controller server deletes the DirectPVVolume CRD for an unbound volume after releasing its previously reserved space in the DirectPVDrive CRD.
- Expand volume: The legacy controller server expands the DirectPVVolume CRD after reserving the requested storage space in the DirectPVDrive CRD.
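The legacy controller server differs from the main controller server in two ways. Create requests always fail, and delete and expand requests apply only to volumes that DirectCSI created earlier. The sketch below captures just those two rules and omits the drive-space bookkeeping. UnsupportedRequest, LegacyControllerServer and the name-to-size dictionary are hypothetical. A CSI server reports errors as gRPC errors, not as Python exceptions.

```python
class UnsupportedRequest(Exception):
    """Raised for requests the legacy controller refuses (hypothetical)."""


class LegacyControllerServer:
    """Sketch of legacy request handling; drive bookkeeping is omitted."""

    def __init__(self, legacy_volumes: dict[str, int]) -> None:
        # Maps names of volumes originally created by DirectCSI to sizes in bytes.
        self.volumes = dict(legacy_volumes)

    def _require_legacy(self, name: str) -> int:
        if name not in self.volumes:
            raise UnsupportedRequest(f"{name!r} is not a legacy DirectCSI volume")
        return self.volumes[name]

    def create_volume(self, name: str, size_bytes: int) -> None:
        # Always rejected: no new legacy volumes are ever created.
        raise UnsupportedRequest(f"cannot create legacy volume {name!r}")

    def delete_volume(self, name: str) -> None:
        self._require_legacy(name)
        # Per the description above, reserved drive space is released before this step.
        del self.volumes[name]

    def expand_volume(self, name: str, new_size_bytes: int) -> int:
        current = self._require_legacy(name)
        # Per the description above, drive space is reserved before this step.
        self.volumes[name] = max(current, new_size_bytes)
        return self.volumes[name]


legacy = LegacyControllerServer({"legacy-vol": 10})
print(legacy.expand_volume("legacy-vol", 20))  # 20
try:
    legacy.create_volume("new-vol", 5)
except UnsupportedRequest as err:
    print("rejected:", err)  # rejected: cannot create legacy volume 'new-vol'
```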

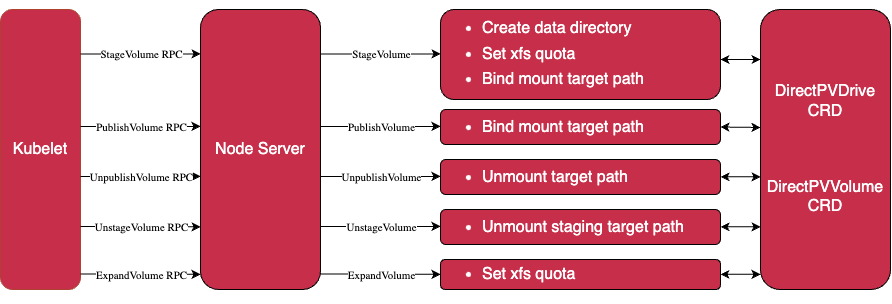

Node server

The node server runs as DaemonSet Pods named node-server on all or selected Kubernetes nodes.

Each node server Pod runs on a node independently.

Each Pod has the following containers:

- Node driver registrar: Registers the node server with the kubelet so that it receives CSI RPC calls.
- Node server: Honors stage, unstage, publish, unpublish and expand volume RPC requests.
- Node controller: Handles events for the DirectPVDrive, DirectPVVolume, DirectPVNode and DirectPVInitRequest CRDs.
- Liveness probe: Exposes a /healthz endpoint that Kubernetes uses to check node server liveness.
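In CSI terms, staging prepares a volume at a node-wide staging path, and publishing makes it available at a specific Pod's target path. The node-side RPCs listed above must therefore arrive in a sensible order. The sketch below models only that ordering as a state machine and performs no mounts. State, TRANSITIONS and NodeVolume are hypothetical. The rule that expansion is allowed once a volume is staged is also an assumption made for illustration.

```python
from enum import Enum


class State(Enum):
    NEW = "new"              # not yet staged on this node
    STAGED = "staged"        # prepared at the node-level staging path
    PUBLISHED = "published"  # also made available at a Pod's target path


# (request, current state) -> next state; any other pair is rejected.
TRANSITIONS = {
    ("stage", State.NEW): State.STAGED,
    ("publish", State.STAGED): State.PUBLISHED,
    ("unpublish", State.PUBLISHED): State.STAGED,
    ("unstage", State.STAGED): State.NEW,
    # Expansion does not change the mount state in this sketch.
    ("expand", State.STAGED): State.STAGED,
    ("expand", State.PUBLISHED): State.PUBLISHED,
}


class NodeVolume:
    """Tracks one volume's node-side state (hypothetical model, no real mounts)."""

    def __init__(self) -> None:
        self.state = State.NEW

    def handle(self, request: str) -> State:
        key = (request, self.state)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {request} a volume that is {self.state.value}")
        self.state = TRANSITIONS[key]
        return self.state


vol = NodeVolume()
for req in ["stage", "publish", "expand", "unpublish", "unstage"]:
    print(req, "->", vol.handle(req).value)
```

The loop walks one volume through its full lifecycle and ends in the new state. Calling handle("publish") on a fresh NodeVolume raises ValueError, because the volume has not been staged yet.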

Legacy node server

The legacy node server runs as DaemonSet Pods named legacy-node-server on all or selected Kubernetes nodes.

Each legacy node server Pod runs on a node independently.

Each Pod runs the following containers:

- Node driver registrar: Registers the legacy node server with the kubelet so that it receives CSI RPC calls.
- Node server: Honors stage, unstage, publish, unpublish and expand volume RPC requests.
- Liveness probe: Exposes a /healthz endpoint that Kubernetes uses to check legacy node server liveness.
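Both the node server and the legacy node server expose a /healthz endpoint for liveness checks. For an HTTP liveness probe, the kubelet sends a GET request and treats any status code from 200 to 399 as success. The standard-library sketch below serves such an endpoint. It is not the code of the probe container that DirectPV ships. driver_is_healthy is a hypothetical placeholder. A real probe would ask the CSI driver whether it is healthy, for example through the CSI Probe RPC.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def driver_is_healthy() -> bool:
    """Hypothetical health check; a real probe would query the CSI driver."""
    return True


class HealthzHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/healthz":
            self.send_error(404)
            return
        healthy = driver_is_healthy()
        body = b"ok" if healthy else b"unhealthy"
        # 200 tells the kubelet the container is alive; 500 makes the probe fail.
        self.send_response(200 if healthy else 500)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example output quiet


def serve(port: int = 0) -> HTTPServer:
    """Start the endpoint in a background thread; port 0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), HealthzHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    server = serve()
    url = f"http://127.0.0.1:{server.server_port}/healthz"
    with urllib.request.urlopen(url) as resp:
        print(resp.status, resp.read().decode())  # 200 ok
    server.shutdown()
```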