DirectPV is useful for discovering, formatting, mounting, scheduling, and monitoring drives across servers. Kubernetes hostPath and local PVs are statically provisioned and limited in functionality, and DirectPV was created to address these limitations.

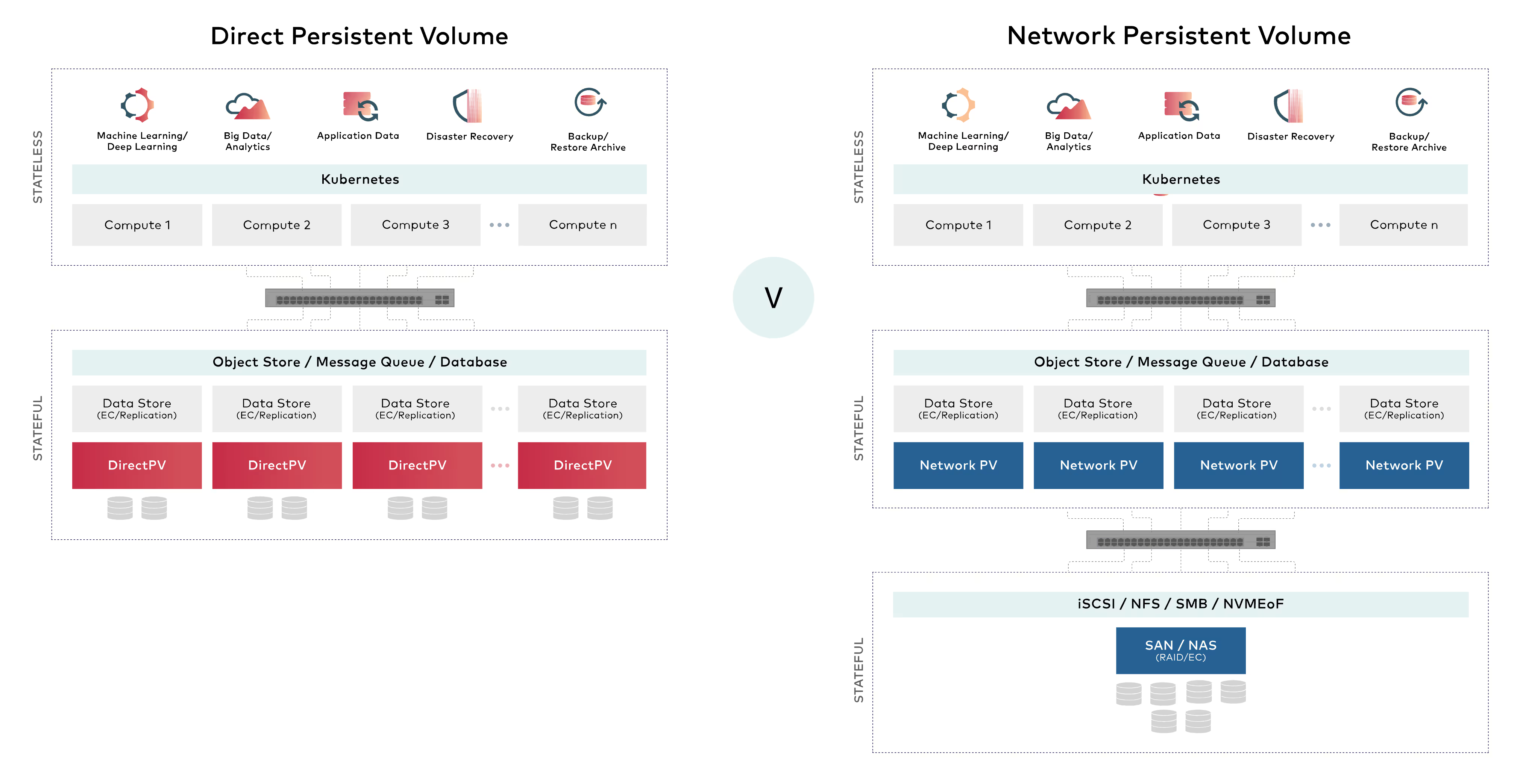

Distributed data stores such as object storage, databases, and message queues are designed for direct attached storage, and they handle high availability and data durability themselves. Running them on traditional SAN- or NAS-based CSI drivers (Network PV) adds yet another layer of replication or erasure coding, plus extra network hops in the data path. This additional layer of disaggregation results in increased complexity and poor performance.

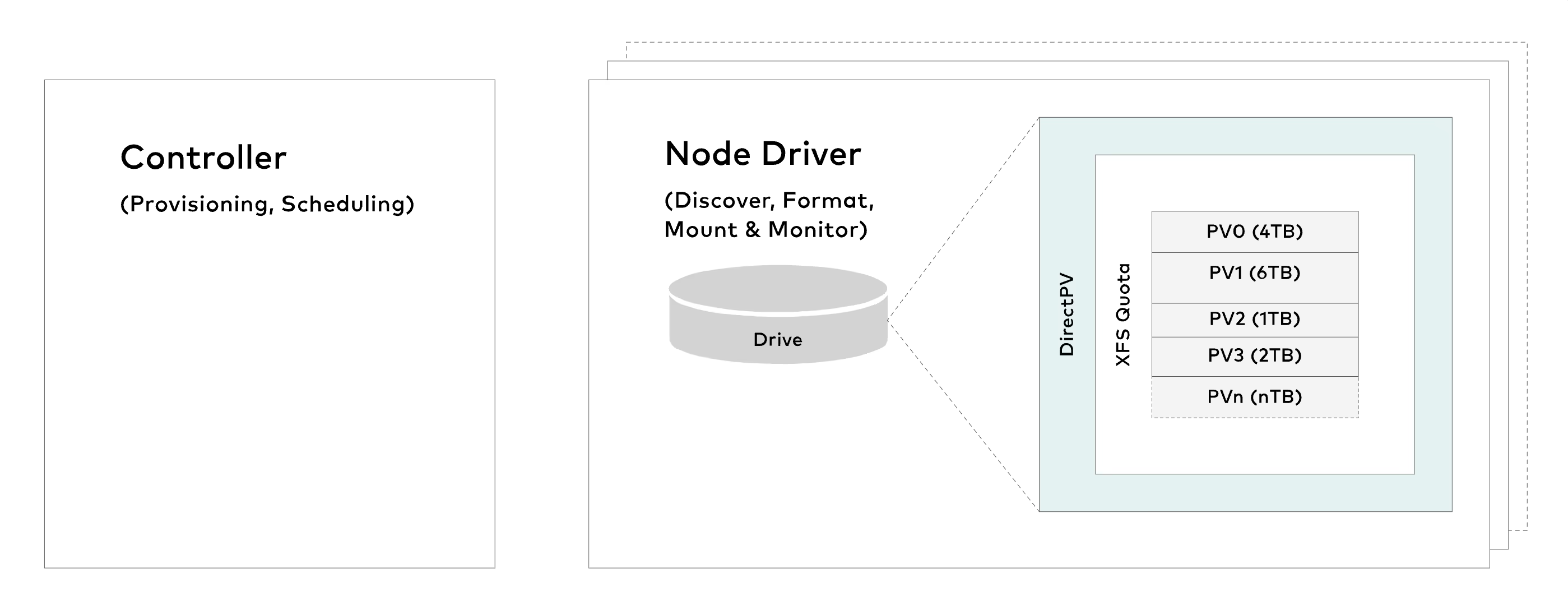

DirectPV is designed to be lightweight and to scale to tens of thousands of drives. It consists of three components: the Controller, the Node Driver, and the UI.

When a volume claim is made, the Controller provisions volumes uniformly from a pool of free drives. DirectPV is aware of pods' affinity constraints and allocates volumes from drives local to the pods. Note that only one active instance of the Controller runs per cluster.
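The allocation policy described above can be illustrated with a small, hypothetical Python sketch. It is not DirectPV's implementation (DirectPV is written in Go and driven by Kubernetes CSI requests). The `Drive` record, `pick_drive`, and `provision` are illustrative names, and the tie-breaking rule (fewest volumes first, then most free space) is only an assumption about what "uniformly" could mean in practice.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Drive:
    # Hypothetical model of a drive tracked by the controller.
    name: str
    node: str
    free_bytes: int
    volumes: list[str] = field(default_factory=list)


def pick_drive(drives: list[Drive], node: str, size: int) -> Optional[Drive]:
    """Choose a drive for a new volume of `size` bytes for a pod on `node`.

    Only drives local to the pod's node with enough free space qualify
    (locality). Among them, prefer the drive hosting the fewest volumes,
    then the one with the most free space, so allocations spread evenly.
    """
    candidates = [d for d in drives if d.node == node and d.free_bytes >= size]
    if not candidates:
        return None  # no local drive can satisfy this claim
    return min(candidates, key=lambda d: (len(d.volumes), -d.free_bytes))


def provision(drives: list[Drive], node: str, volume: str, size: int) -> Optional[Drive]:
    """Reserve capacity for `volume` on the chosen drive and return that drive."""
    drive = pick_drive(drives, node, size)
    if drive is not None:
        drive.free_bytes -= size
        drive.volumes.append(volume)
    return drive
```

For example, with two equal 100 GiB drives `sda` and `sdb` on `node-1` and a larger drive on `node-2`, three 10 GiB claims from pods on `node-1` land on `sda`, `sdb`, then `sda` again. The `node-2` drive is never chosen for them, however much free space it has.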

The Node Driver implements volume management functions such as discovering, formatting, mounting, and monitoring drives on the nodes. One instance of the Node Driver runs on each storage server.
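Discovery is essentially a filter over the block devices that a node reports. The hypothetical sketch below shows one plausible eligibility check before a drive is offered for formatting. The `BlockDevice` fields and the 512 MiB minimum are assumptions for illustration, not DirectPV's documented rules or API. A real node driver would gather these attributes from the operating system (for example, sysfs and the mount table on Linux) rather than receive them as a ready-made list.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical minimum size for an eligible drive (assumption, not from DirectPV).
MIN_SIZE_BYTES = 512 * 1024 * 1024


@dataclass
class BlockDevice:
    # Hypothetical summary of attributes a node driver might probe per device.
    path: str
    size_bytes: int
    read_only: bool
    mounted: bool
    has_partitions: bool


def is_available(dev: BlockDevice) -> bool:
    """Return True if the device looks safe to format and hand to the controller."""
    return (
        not dev.read_only
        and not dev.mounted
        and not dev.has_partitions
        and dev.size_bytes >= MIN_SIZE_BYTES
    )


def discover(devices: list[BlockDevice]) -> list[str]:
    """Return the paths of eligible devices, preserving input order."""
    return [d.path for d in devices if is_available(d)]
```

Excluding mounted or partitioned devices is the conservative choice here: it keeps the sketch from ever proposing to format a disk that already holds an operating system or other data.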

Storage administrators can use the kubectl CLI plugin to select, manage, and monitor drives. A web-based UI is currently under development.

First, uninstall the older version of DirectPV. Once it is uninstalled, follow the Installation instructions to install the latest DirectPV. During this process, all existing drives and volumes are migrated automatically.

Refer to the following steps to upgrade DirectPV using krew.

To migrate from versions older than v3.2.0, refer to the upgrade guide.

Please review the security checklist before deploying to production.

Important: Report security issues to security@min.io. Please do not report security issues here.

DirectPV is a MinIO project. You can contact the authors on the Slack channel:

DirectPV is released under the GNU AGPLv3 license. Please refer to the LICENSE document for a complete copy of the license.